Crashplan Max Memory Via Docker on a Synology NAS

TL;DR

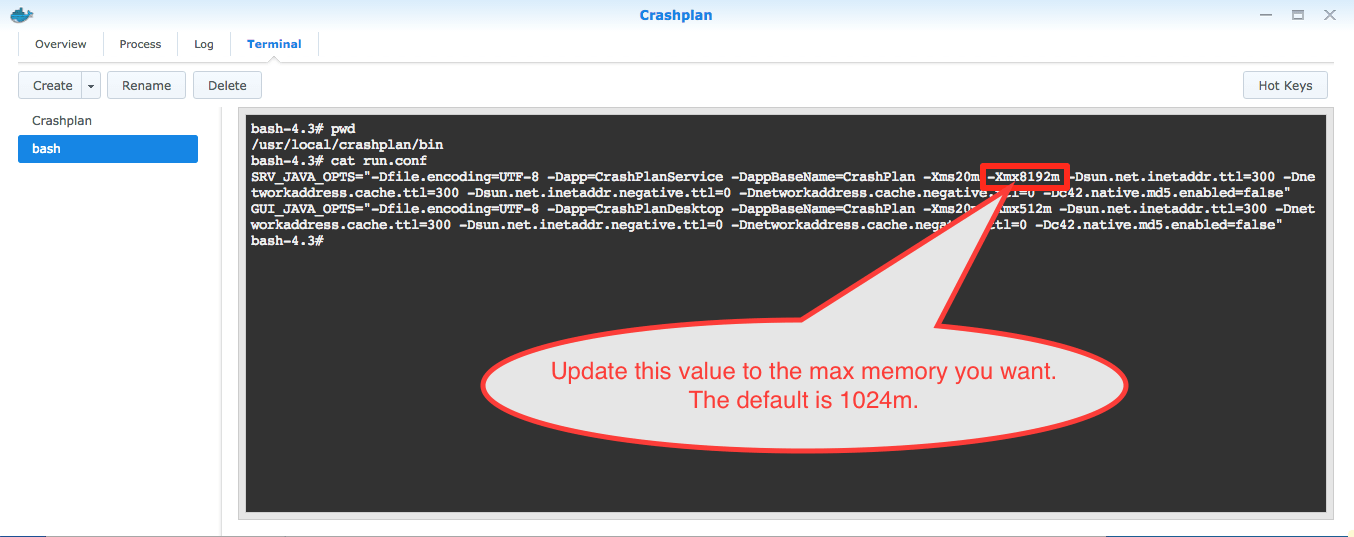

Update /usr/local/crashplan/bin/run.conf with the maximum size you want the Java heap to be allowed to use (the -Xmx value):

SRV_JAVA_OPTS="-Dfile.encoding=UTF-8 -Dapp=CrashPlanService -DappBaseName=CrashPlan -Xms20m -Xmx1024m -Dsun.net.inetaddr.ttl=300 -Dnetworkaddress.cache.ttl=300 -Dsun.net.inetaddr.negative.ttl=0 -Dnetworkaddress.cache.negative.ttl=0 -Dc42.native.md5.enabled=false"

Backstory

If you’re like me and you have an awesome Synology DiskStation (I have a 1515+), you probably want to back up the all-important data that you are storing. Whether it is family photos or business documents, you will typically want an offsite backup in the event of a catastrophic disk failure or some other disaster such as a flood or fire. Scott Hanselman has an excellent blog post outlining why you should back up your data as well as how thorough you should be about it. Make no mistake, people think backups and redundant backups are a waste of time and money until they actually need them. Please take a moment and read Scott’s excellent post. Even though it is several years old, every point he makes is still relevant today.

Another quick tidbit that most people overlook is that Dropbox, iCloud, OneDrive, and Google Drive usually aren’t an adequate backup for working data. In most cases, cloud providers will give you access to the most recent version of a synchronized file. This means that if you accidentally save your thesis paper after fat-fingering select all + delete, you’re in trouble. Or, perhaps, you hit delete on the wrong file without realizing it. Cloud providers do an excellent job of synchronizing data and files between devices, but they don’t usually help much with disaster recovery when you need to retrieve old data that was overwritten or accidentally deleted.

I highly recommend Crashplan for all of your personal and small business disaster recovery needs. Their pricing is excellent for the service they provide (unlimited storage!). Other comparable services are much more expensive.

Now that you’ve decided that Crashplan is the best thing since sliced bread, you’ll want to install it on your Synology NAS in order to back up your centralized data. This can be a little daunting, as Crashplan still hasn’t issued an official package for Synology NAS devices.

Previously, in order to get this working, you had to add an unofficial package source and install a package that worked around Crashplan’s install limitations. Again, our friend Scott Hanselman has an excellent post about how to do this. Unfortunately, Crashplan has a tendency to automatically upgrade itself, and the update process doesn’t play nice with Synology DSM’s version of Linux. This resulted in a lot of pain every few months when Crashplan auto-updated. You wouldn’t realize there was an issue unless you happened to check your backup status or had the Crashplan service email you whenever no backup had been received for a while. Chris Nelson’s article details what was involved.

As with all things, eventually a Crashplan update broke the install in a way that wasn’t easily repaired. Enter Docker! Now we can run a version of Linux that is supported by Crashplan in a container, thus allowing updates to install properly. If you want to go down this road, here are the directions to do so. Thanks Mike!

A small caveat here: I did have to modify how my volumes were attached to the container. As some others stated, I had to add them manually via the DSM UI. Attaching them via the command line did not work as expected for me. I also needed to adjust some paths so that I could adopt my previous Crashplan backup without sending all of my data to the servers again.

After all of this work, we now have a working backup solution that will back up our data periodically, storing versions and diffs so that we can retrieve older versions of our files if necessary. Huzzah! But wait…something’s wrong! Crashplan keeps…well…crashing!

If you find something like this in the logs:

backup42.service.backup.BackupController] OutOfMemoryError occurred...RESTARTING! message=OutOfMemoryError in BackupQueue!

You may be backing up so much data that the Crashplan engine needs more memory in order to continue functioning. That doesn’t make sense, you say. I’ve got lots of memory in my Synology. I even upgraded my memory to 16 GB like this guy did!
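If you would rather not eyeball the logs, a few lines of Python can pick out the telltale message. This is only a rough sketch: the function name find_oom_lines is made up for illustration, and it takes the log lines as input because where your log file lives depends on how your container is set up.

```python
from typing import Iterable, List


def find_oom_lines(log_lines: Iterable[str]) -> List[str]:
    """Return the log lines that mention an OutOfMemoryError."""
    return [line.rstrip("\n") for line in log_lines if "OutOfMemoryError" in line]


if __name__ == "__main__":
    # The first entry is the message quoted above; the second is a
    # made-up placeholder standing in for ordinary log output.
    sample_log = [
        "backup42.service.backup.BackupController] OutOfMemoryError occurred..."
        "RESTARTING! message=OutOfMemoryError in BackupQueue!",
        "some unrelated log line",
    ]
    for hit in find_oom_lines(sample_log):
        print(hit)
```

Any hit is a strong hint that the heap limit, not the amount of RAM in the NAS, is the problem.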

Here’s the big gotcha…you have to set Crashplan’s maximum Java heap size in one of its configuration files. Crashplan support has a decent article explaining why you need to do so. Yes, you can do it through the GUI as the article describes. Either way, you end up modifying the run.conf file. I changed mine to allow Crashplan to use up to 8GB of memory if it needed to do so.
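If you prefer to script the change instead of editing run.conf by hand, something like the following could work. Treat it as a minimal sketch: set_max_heap is a name I made up, it only touches the -Xmx flag on the SRV_JAVA_OPTS line, and it works on the file's text so you can try it on a copy before touching the real file.

```python
import re

# Matches a JVM max-heap flag such as -Xmx1024m or -Xmx8g.
# -Xms (the initial heap size) is deliberately not matched.
XMX_PATTERN = re.compile(r"-Xmx\d+[kKmMgG]?")


def set_max_heap(conf_text: str, new_value: str) -> str:
    """Return conf_text with -Xmx on the SRV_JAVA_OPTS line set to new_value."""
    updated_lines = []
    for line in conf_text.splitlines(keepends=True):
        if line.startswith("SRV_JAVA_OPTS="):
            line = XMX_PATTERN.sub(lambda _match: f"-Xmx{new_value}", line)
        updated_lines.append(line)
    return "".join(updated_lines)


if __name__ == "__main__":
    # Shortened version of the SRV_JAVA_OPTS line, for illustration only.
    sample = 'SRV_JAVA_OPTS="-Dapp=CrashPlanService -Xms20m -Xmx1024m"\n'
    print(set_max_heap(sample, "8192m"), end="")
```

To apply it for real, you would read run.conf into a string, pass it through set_max_heap, and write the result back, keeping a backup of the original file in case something goes wrong.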

I had an issue in which my run.conf file was being reset to its defaults. I’m not exactly certain why this was happening, but it could have been due to a bad upgrade scenario. Every time the container was restarted (due to a power event, DSM version upgrade, or something similar), the max memory was reset back to 1024. I noticed in the logs that Crashplan was upgrading and restarting itself each time the container was restarted, so I’m guessing that was the root cause of the issue.

After spending some time trying to track it down, I simply downloaded the latest version of the Docker image, launched a new container using the DSM UI, set it up exactly the same as my original container (mapping the exact same ports and volumes), copied the .identity and .ui_info files from the original container into the /var/lib/crashplan folder, and modified the run.conf to set the max memory size to 8GB (8192m). After that, I restarted the container, and the memory setting was respected between restarts. I did have to log into my account again when connecting with the GUI running on my MacBook Pro to see if the backups were processing properly.
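Since the setting silently reverted on me before, it is worth checking after a restart that the value stuck. Here is a small sketch of how you might do that. The helper name max_heap_mb is hypothetical, and it assumes the file has a single SRV_JAVA_OPTS line like the one in the TL;DR.

```python
import re
from typing import Optional

# Multipliers to convert a JVM size suffix into megabytes.
_UNITS_TO_MB = {"k": 1 / 1024, "m": 1, "g": 1024}


def max_heap_mb(conf_text: str) -> Optional[float]:
    """Return the -Xmx value on the SRV_JAVA_OPTS line in megabytes, or None."""
    for line in conf_text.splitlines():
        if not line.startswith("SRV_JAVA_OPTS="):
            continue
        match = re.search(r"-Xmx(\d+)([kKmMgG]?)", line)
        if match is None:
            return None
        number, unit = int(match.group(1)), match.group(2).lower()
        if unit == "":
            # The JVM treats a value without a suffix as bytes.
            return number / (1024 * 1024)
        return number * _UNITS_TO_MB[unit]
    return None


if __name__ == "__main__":
    # Shortened version of the SRV_JAVA_OPTS line, for illustration only.
    sample = 'SRV_JAVA_OPTS="-Xms20m -Xmx8192m"'
    if max_heap_mb(sample) == 8192:
        print("max heap is still 8GB")
    else:
        print("max heap was reset")
```

If this reports a reset after a container restart, you are probably hitting the same upgrade-and-restart loop described above.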

Finally, though, I have an install of Crashplan that should work properly with upgrades and will not crash when backing up my large data repository.